The obsession with SOTA needs to stop

(This is a rough draft. I may do some heavy edits to this over time. Stay tuned.)

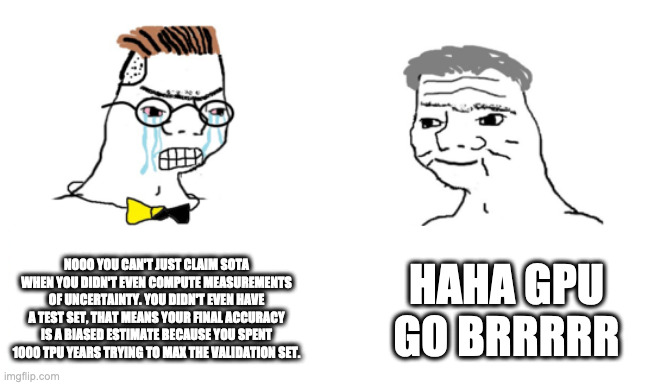

One of my biggest frustrations with machine learning (I guess more specifically deep learning) has been the absolutely abysmal quality of reviews that has come out over the past few years. A very common trope is the rejection of a paper because it fails to ‘beat SOTA’ (state-of-the-art), amongst many other critiques (‘lacks novelty’, ‘too theoretical’, etc.). I want to specifically vent about the obsession of SOTA and why I think it is not only stupid, but incentivises bad research.

To start off, I want to get off my chest that I don’t have a problem with SOTA-chasing per se as a research contribution. If you come from an engineering mindset where you want to maximise the performance of some model on a real-world setting then sure, you would place lots of emphasis on obtaining state-of-the-art performance. We want to have the best performing algorithms deployed in the real world, else self-driving cars might drive off of cliffs and kill their owners. Obviously, there are many other research contributions one could pursue: optimising for other useful metrics (e.g. memory footprint, inference speed), comparing algorithms, performing ablations, testing hypotheses, new perspectives, and so forth. My main issue lies with reviewers (and authors) placing exhorbitant emphasis on “SOTA”. There are two main reasons why I have absolute disdain for ths:

- (1) There is more to research than beating SOTA (duh, see the paragraph I wrote above on all the other things you could work on);

- (2) comparisons between algorithms in the literature are generally flaky and often times not statistically significant.

In my experience reading papers in machine learning, many authors appear to lack a basic understanding for how to properly evaluate a machine learning model – or, maybe they do but want to appease silly reviewers who don’t know how to do it. After all, the ultimate unit of currency in academia is the (published) paper and this often incentivises sloppiness. Here are all of the things I have seen people do (or not do) when it comes to papers proposing SOTA contributions:

- (a) There is no distinction between a validation and test set. Ergo, the validation set is the test set and authors basically just tune hyperparameters on the test set, leading to optimistic estimates of generalisation performance.

- (b) Strawmanning the baseline and steelmanning the proposed algorithm. Because there is so much emphasis on ~novelty~ in the field, one is strongly incentivised to spend all of their hyperparameter tuning budget (GPUs) on squeezing every little bit of performance out of their proposed algorithm, when the same may not have been done for the baseline.

- (c) No estimates of uncertainty are computed for the (supposedly better) proposed algorithm. So what if your method does 95.09% and the previously SOTA method was 95.01%? It could very well go in the opposite direction over multiple runs of the same experiment (more on that soon).

- (d) The issue of confounding variables. Often times papers will simply just quote numbers from other papers. While it is certainly pragmatic to do in a very fast-paced field, there will often be many confounding variables because the experimental setup of the paper you are comparing against is completely different. Maybe they are using a different framework, maybe they have preprocessed the data differently, maybe a different optimiser was used, and so forth. I am not discouraging against this, but rather saying that if you decide to go with that approach everything needs to be taken with a grain of salt. This means that as an author, you need to be extremely modest with your claims of SOTA, considering how many confounding variables you did not control for. As a reviewer, this means that if the proposed algorithm gets a few percentage points lower than SOTA then you need to ask yourself: what exactly are the paper’s claims? If the paper is claims to beat SOTA by 5% and that is literally the only proposed contribution, then sure, you’d probably be justified in rejecting it either on the grounds of statistical flakiness (i.e. cofounders). Either way, there usually there are other contributions in a paper and these need to be evaluated against the authors’ claims and done so in a holistic manner. If the paper supposedly is worse than SOTA by 5% but is not central to the paper and/or the authors don’t claim to beat it, why are you arguing for its rejection based on the fact that they didn’t beat it? Shouldn’t the positive and negative points of a paper be weighted in proportion to the claims associated with those points and the results associated with those claims?

Both authors and reviewers are often ignorant of the above points, though my criticism lies more with reviewers because they are meant to be the gatekeepers of the literature (and I mean gatekeeping in a good way, not the malevolent elitist way). When reviewers are ignorant of it and are obsessed with SOTA, they end up accepting papers that claim SOTA but whose claims are barely supported statistically. This just adds noise to the literature and makes life harder for the honest researcher who is actually trying to beat SOTA in a a principled manner. Maybe that honest researcher finds that at the end of the day none of the algorithms compared really do any better than the other, but reviewers won’t care about their paper because ~novelty and SOTA wins above everything else~. On the other hand, reviewers can also end up rejecting papers on the basis of not beating SOTA because they value it disproportionally at the expense of all of the other interesting contributions and results that the paper may have proposed (see bullet point (e)).

Extra rambles

Furthermore, on the topic of measurements of uncerainty: what is the uncertainty being computed over (when confounding variables are controlled)? Computing uncertainty (variance) over random initialisation seeds is completely different to say, random subsampling or cross-validation over your training set. In the former case, one is measuring the behaviour of the algorithm when subjected to random initialisations: if algorithm A gets 85% +/- 5% accuracy and algorithm B gets 92% +/- 10% accuracy, then this would indicate that B is less stable and would probably need more repeated training runs so that we can select the model which performs best on the validation set. If we are randomly subsampling our data, then we are essentially measuring the stability of the algorithm with respect to what might happen if one were to collect the data in practice. For instance, if we performed cross-validation and algorithm A obtained 85% +/- 20% and algorithm B obtained 85% +/- 5%, then implementing and running algorithm A on our own dataset is a whole lot riskier since it may only give us an accuracy of 60% simply by chance (i.e. one standard deviation below the mean). I am bringing this specific example up because as an author, you may propose an algorithm which performs better with respect to dataset uncertainty than seed uncertainty, but in today’s day and age I don’t even think enough reviewers are nuanced enough (or simply care) to take this into account.

Conclusion

That is all for now.